Software Systems Will Fail

GitLab had a very public outage last month. Most companies offer some kind of explanation when their services are interrupted, but those explanations are usually sanitized (or seem sanitized) to make things look better than they actually are. GitLab instead published an extremely detailed report of the incident, along with everything they know they could be doing better.

Some of my friends were extremely troubled by the report. They believed that GitLab had shown extreme incompetence and couldn't be trusted by its customers. In their view, GitLab should close up shop and help migrate its clients to a different service.

I disagree. GitLab did describe a lot of poor practices that contributed to the outage, but there is some room for forgiveness. Many things had to go wrong first, but the outage culminated in a developer performing an operation on the wrong machine.

Sounds horrible. And it is. But let’s look at what the work probably looked like:

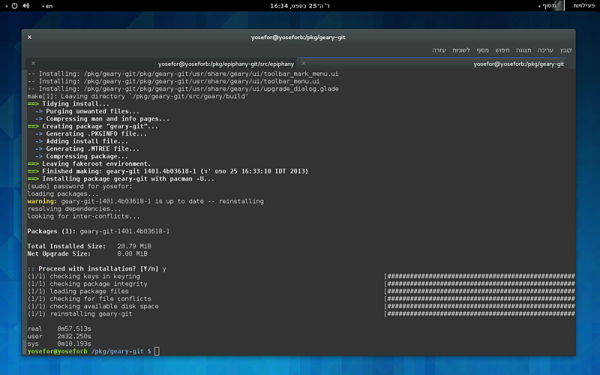

The developer probably had a few of those windows open. All text. No images. Not exactly an abundance of visual cues to confirm that the operation is about to run on the right machine.

If you think a developer is above making a mistake like this:

Have you ever accidentally hit reply all on a snarky email about your boss and sent it TO your boss?

Have you ever accidentally friended an ex on Facebook?

Have you ever sent a chat meant for your friend to your parents?

Have you ever deleted the wrong file from your computer?

Humans make mistakes. We’re imperfect creatures.

Most engineering organizations take steps to account for human error. The most critical operations should have safeguards just to make sure a human doesn’t accidentally do something they don’t mean to do. It’s easy to criticize a team for not having these safeguards.
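To make "safeguard" a little more concrete, here is a minimal Python sketch of one common kind: a destructive operation that refuses to run unless the machine it is on matches the machine the operator intended, and the operator has retyped that machine's name. Everything in it is hypothetical and for illustration only: the function names, the `WrongHostError` exception, and the host names are my assumptions, not anything taken from GitLab's report or tooling.

```python
import socket


class WrongHostError(RuntimeError):
    """Raised when a destructive operation is attempted on an unexpected host."""


def confirm_destructive(expected_host, typed_confirmation, current_host=None):
    """Return normally only if both checks pass.

    1. The machine we are actually on is the one we meant to be on.
    2. The operator retyped that machine's name, which forces a second look.
    """
    if current_host is None:
        # Ask the operating system rather than trusting the terminal prompt.
        current_host = socket.gethostname()
    if current_host != expected_host:
        raise WrongHostError(
            f"refusing to run: this is {current_host!r}, not {expected_host!r}"
        )
    if typed_confirmation.strip() != expected_host:
        raise WrongHostError(
            "refusing to run: confirmation did not match the host name"
        )


def wipe_data_directory(path, expected_host, typed_confirmation, current_host=None):
    """Hypothetical destructive operation guarded by the check above."""
    confirm_destructive(expected_host, typed_confirmation, current_host)
    # The real deletion is deliberately left out of this sketch.
    return f"would delete {path} on {expected_host}"
```

In an interactive script the `typed_confirmation` argument would come from something like `input("Type the host name to continue: ")`. A guard like this doesn't make mistakes impossible. It only adds one more moment where a wrong-window mistake can be caught.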

But how do you learn what safeguards to put in place? You can read about them. Yet have you ever become an expert in something by reading about it? Probably not. Expertise requires experience in most professions. Managing software systems is no different. Knowing what safeguards are worthwhile and how to implement them properly requires experience.

All software systems go down at some point. Even the biggest companies in the world, with some of the best developers in the world, have issues. Gmail had an outage last fall. Twitter used to go down every other day in the early days. Even Amazon, which many companies rely on to host their web applications, has gone down multiple times. If you read the postmortem on their most recent outage, the team was following an established procedure, so they ran into trouble even with safeguards in place. The outage was effectively caused by a typo.

A typo.

A typo took down a huge chunk of internet services that rely on Amazon. Even a company as large and skilled as Amazon didn’t find the right mix of safeguards to perfectly protect against human error.

While it’s nice to have the goal of a web application that’s up 100% of the time, it simply isn’t realistic. How you handle an outage, and what you learn from it, matters just as much as trying to prevent one. GitLab didn’t handle their outage very well, but they do seem to have learned a lot from the incident. Everyone needs a chance to get that learning experience. Individual people need it, but so do organizations.
